The UK Government’s Proposed Requirements to Protect Children from Toxic Algorithms

The rise of digital technology has brought many benefits to society, but it has also raised concerns about its negative effects, especially on children. One significant concern is the use of algorithms that may expose children to harmful or toxic content online. In response, the UK government has proposed a series of requirements aimed at protecting children from such algorithms.

One key proposal is a robust regulatory framework to govern the use of algorithms on online platforms targeted at children. This framework would set out clear guidelines for developers and platform operators on how algorithms should be designed and implemented so that they do not harm children or expose them to inappropriate content. With clear rules and standards in place, the government aims to hold platforms accountable for the algorithms they use and ensure that children are not put at risk.

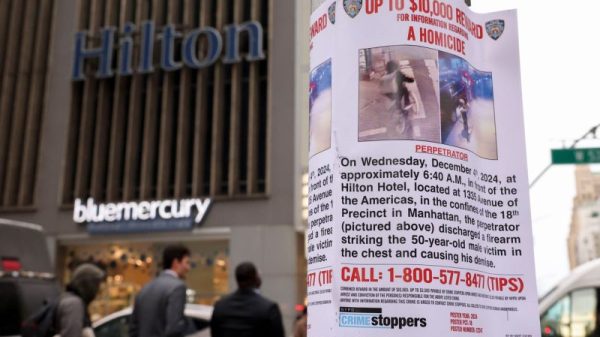

Another important proposed requirement is age verification to prevent children from accessing age-inappropriate content. Platforms would verify the age of their users, particularly before showing content that may be harmful or unsuitable for younger audiences. Robust age verification would help platforms protect children from toxic content that may have long-lasting negative effects on their mental and emotional well-being.

Furthermore, the government has proposed that platforms be transparent about the algorithms they use and the data they collect from children. Platforms would be more open about how their algorithms work, what data they collect, and how that data is used to personalize content for users. Greater transparency would give children and their parents a better understanding of how algorithms shape their online experiences and help them make more informed choices about the content they engage with.

Additionally, the government has proposed that platforms provide easy-to-use parental controls that allow parents to monitor and manage their children’s online activities. These tools would help parents protect their children from harmful algorithms and content. The controls may include options to limit screen time, block certain types of content, or monitor a child’s online behavior to identify potential risks early on.

Overall, the UK government’s proposed requirements to protect children from toxic algorithms demonstrate a commitment to safeguarding the well-being of young users in the digital age. By establishing clear guidelines, requiring age verification, increasing transparency, and mandating parental controls, the government aims to create a safer online environment in which children can explore and learn without being harmed by algorithms. As technology continues to evolve, regulators and policymakers will need to adapt and develop measures that prioritize the protection and welfare of children online.